The Intelligent Data Platform

EIMetrixs Core Technology

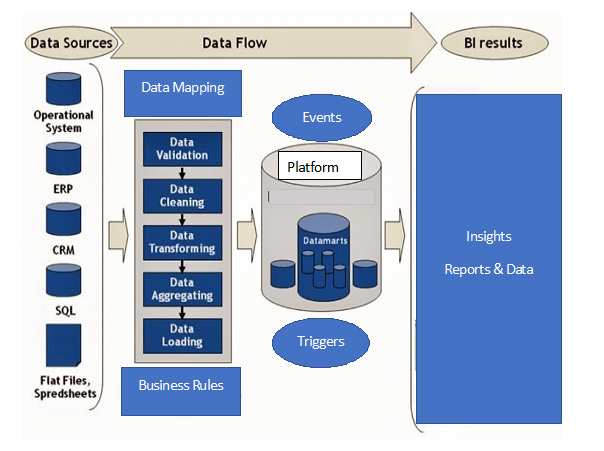

IDP Process Flow Diagram

The IDP process flow consists of three stages: extracting data from the identified data sources, applying the various data flow analysis processes, and generating business intelligence, or Insights.

During extraction, the required data can be pulled from many different sources, including database systems and applications. Depending on the business rules and the industry-specific data being extracted or used, various processing steps are then applied, such as data mapping, validation, cleansing, transformation, aggregation, and loading.
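The sequence of steps above can be illustrated with a small in-memory sketch. It is not the IDP implementation: the source rows, the `FIELD_MAP` mapping, and the function names are hypothetical, and the "load" step simply writes into a Python dict standing in for a target system.

```python
from collections import defaultdict

# Hypothetical rows as they might arrive from one source system.
CRM_ROWS = [
    {"cust_id": "C1", "region": " east ", "amount": "120.50"},
    {"cust_id": "C2", "region": "WEST", "amount": "80"},
    {"cust_id": "C3", "region": "east", "amount": "not-a-number"},
]

# Hypothetical data mapping: source column name -> target column name.
FIELD_MAP = {"cust_id": "customer_id", "region": "region", "amount": "sales"}


def map_fields(row):
    """Data mapping: rename source columns to their target names."""
    return {FIELD_MAP[k]: v for k, v in row.items() if k in FIELD_MAP}


def clean(row):
    """Cleansing: trim whitespace and standardize region codes to upper case."""
    return {**row, "region": row["region"].strip().upper()}


def is_valid(row):
    """Validation: keep only rows whose sales value parses as a number."""
    try:
        float(row["sales"])
        return True
    except ValueError:
        return False


def aggregate(rows):
    """Aggregation: total sales per region."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += float(row["sales"])
    return dict(totals)


def run_pipeline(source_rows, target):
    """Map -> clean -> validate -> aggregate -> load into `target`."""
    mapped = (map_fields(r) for r in source_rows)
    cleaned = (clean(r) for r in mapped)
    valid = [r for r in cleaned if is_valid(r)]
    target.update(aggregate(valid))  # the "load" step: a dict stands in for the target
    return target


warehouse = run_pipeline(CRM_ROWS, {})
print(warehouse)  # {'EAST': 120.5, 'WEST': 80.0}
```

The third row is rejected at validation, so it never reaches aggregation. A production platform would stream far larger volumes and persist to a real target, but the ordering of the steps is the same.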

Metadata values are then stored. They contain dynamic data logic (intelligent, data-driven, system-generated algorithms managed by customer-supplied business rules), together with the events and triggers that generate the business intelligence Insights (Reports and Data).
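One way to picture business rules "managed by customer supplied business rules" is to store each rule as data rather than code, and evaluate it against computed values to decide whether an Insight is triggered. The sketch below assumes a simple threshold rule; the `Rule` fields and the `evaluate` function are hypothetical and are not taken from the IDP.

```python
import operator
from dataclasses import dataclass

# Comparison operators a rule may reference, looked up by name.
OPS = {">": operator.gt, "<": operator.lt, ">=": operator.ge, "<=": operator.le}


@dataclass
class Rule:
    """A hypothetical business rule kept as data (metadata), not as code."""
    name: str
    metric: str       # which computed value the rule watches
    op: str           # key into OPS
    threshold: float  # customer-supplied threshold


def evaluate(rules, metrics):
    """Fire each rule whose condition holds and return the resulting Insights."""
    insights = []
    for rule in rules:
        value = metrics.get(rule.metric)
        if value is not None and OPS[rule.op](value, rule.threshold):
            insights.append(f"{rule.name}: {rule.metric}={value} {rule.op} {rule.threshold}")
    return insights


rules = [
    Rule("High east sales", "EAST", ">", 100.0),
    Rule("Low west sales", "WEST", "<", 50.0),
]
print(evaluate(rules, {"EAST": 120.5, "WEST": 80.0}))
# ['High east sales: EAST=120.5 > 100.0']
```

Because the rules are plain records, a customer could change a threshold without redeploying code, which is the general appeal of metadata-driven logic.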

The Insights can be used to generate and capture value, which can lower costs and increase ROI.

- Enterprise-level data integration. The Intelligent Data Platform ensures data accuracy through a single environment for transforming, profiling, integrating, cleansing, and reconciling data, and for managing metadata.

- The Intelligent Data Platform ensures security through complete authentication, granular privacy management, and secure transport of your data within the platform during processing.

- The Intelligent Data Platform can communicate with a wide range of data sources and move large volumes of data between them efficiently.

With the Intelligent Data Platform (“IDP”), you can:

- Integrate data to provide business users holistic access to enterprise data – data is comprehensive, accurate, and timely.

- Scale and respond to business needs for information – deliver data in a secure, scalable environment that provides immediate data access to all disparate sources.

- Simplify design, collaboration, and re-use to reduce developers’ time to results – unique metadata management helps boost efficiency to meet changing market demands.

- Receive data transformation services based on business intelligence rules, events, triggers, frequencies, data mapping, and result-driven target isolation.

The IDP Data Extraction Process

Each source system may use a different data organization or format. The most common source formats are relational databases and flat files, but sources may also include non-relational database structures such as Information Management System (IMS), other data structures such as Virtual Storage Access Method (VSAM) or Indexed Sequential Access Method (ISAM), or even data fetched from outside sources through web spidering or screen-scraping.
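The two most common formats named above, relational tables and flat files, can be read into one uniform row shape before transformation. This is a minimal sketch using Python's standard `sqlite3` and `csv` modules as stand-ins for real sources; the table, columns, and helper names are hypothetical, and IMS, VSAM, or ISAM sources would need dedicated connectors that are not shown here.

```python
import csv
import io
import sqlite3


def extract_from_sqlite(conn, query):
    """Extract rows from a relational source as a list of dicts."""
    cur = conn.execute(query)
    columns = [d[0] for d in cur.description]
    return [dict(zip(columns, row)) for row in cur.fetchall()]


def extract_from_csv(text):
    """Extract rows from a delimited flat file as a list of dicts."""
    return [dict(row) for row in csv.DictReader(io.StringIO(text))]


# Stand-in relational source: an in-memory SQLite database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, customer TEXT)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, "C1"), (2, "C2")])

# Stand-in flat-file source.
flat_file = "order_id,customer\n3,C3\n"

rows = extract_from_sqlite(conn, "SELECT order_id, customer FROM orders ORDER BY order_id")
rows += extract_from_csv(flat_file)
print(rows)
```

Note that the relational source returns `order_id` as an integer while the flat file returns the string `'3'`. Differences like this are why extracted data usually needs a transformation step before it can be combined.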

The IDP Data Transformation Process

Some data sources will require very little or even no manipulation of data.

In other cases, one or more of the following transformation types may be required to meet the business and technical needs of the end target:

- Selecting only certain data to load or not load based on rules

- Translating coded values and automated data cleansing

- Encoding free-form values

- Deriving a new calculated value

- Filtering

- Sorting

- Joining data from multiple sources

- Aggregation

- Generating surrogate-key values

- Transposing or pivoting data values

- Dynamic data logic application

- Statistical value generation

- Analytical computation based on business rules, events and triggers

- Preparation for time-blockchain formatting and storage

- Data validation

- Metadata generation
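Several of the generic transformation types in the list (filtering, deriving a calculated value, joining, generating surrogate keys, sorting, aggregation, and pivoting) can be shown on a few hypothetical rows. This sketch uses plain Python data structures and is only meant to make the terms concrete; it does not reflect how the IDP itself implements them.

```python
from itertools import count

# Hypothetical sample data from two sources: orders and a customer lookup.
orders = [
    {"order_id": 1, "cust": "C1", "month": "Jan", "qty": 2, "price": 10.0},
    {"order_id": 2, "cust": "C2", "month": "Jan", "qty": 1, "price": 25.0},
    {"order_id": 3, "cust": "C1", "month": "Feb", "qty": 0, "price": 10.0},
    {"order_id": 4, "cust": "C1", "month": "Feb", "qty": 3, "price": 10.0},
]
customers = {"C1": "Acme", "C2": "Globex"}

# Filtering: drop orders with zero quantity.
filtered = [o for o in orders if o["qty"] > 0]

# Deriving a new calculated value: line total = quantity * unit price.
derived = [{**o, "total": o["qty"] * o["price"]} for o in filtered]

# Joining data from multiple sources: look up the customer name.
joined = [{**o, "name": customers[o["cust"]]} for o in derived]

# Generating surrogate-key values: one sequential integer per distinct customer.
next_key = count(start=1)
customer_keys = {}
for o in joined:
    if o["name"] not in customer_keys:
        customer_keys[o["name"]] = next(next_key)

# Sorting: largest line totals first.
by_total = sorted(joined, key=lambda o: o["total"], reverse=True)

# Aggregation and pivoting: customers as rows, months as columns, summed totals.
pivot = {}
for o in joined:
    row = pivot.setdefault(o["name"], {})
    row[o["month"]] = row.get(o["month"], 0.0) + o["total"]

print(customer_keys)                      # {'Acme': 1, 'Globex': 2}
print([o["order_id"] for o in by_total])  # [4, 2, 1]
print(pivot)  # {'Acme': {'Jan': 20.0, 'Feb': 30.0}, 'Globex': {'Jan': 25.0}}
```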

Terminology

Data analysis is the process of inspecting, cleansing, transforming, and modeling data with the goal of discovering useful information, suggesting conclusions, and supporting decision making.

The term data analysis is sometimes used as a synonym for data modeling.

Mapping is the definition of the relationship and data flow between source and target objects. It is a pictorial representation of the flow of data from source to target, and it involves applying intelligent qualifiers, refiners, and selectors that associate the core data with specific keywords, statements, algorithms, and data sets.

These qualifiers, refiners, and selectors can be used to quickly isolate the data elements used to generate dynamic data logic, or data that is auto-generated based on specific business intelligence, events, and triggers.
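A source-to-target mapping can also be held as data. In the sketch below, a selector decides which source rows qualify and a per-field refiner shapes each value on its way to the target. How the document's qualifiers, refiners, and selectors actually work is not specified, so this interpretation, the `MAPPING` structure, and the `is_active` selector are all assumptions made for illustration.

```python
# Hypothetical mapping specification. For each target field it records the
# source field and a "refiner" function applied on the way through.
MAPPING = {
    "customer_name": {"source": "cust_nm", "refiner": str.title},
    "country": {"source": "ctry_cd", "refiner": str.upper},
    "revenue": {"source": "rev", "refiner": float},
}


def is_active(row):
    """Hypothetical selector: only active source records flow to the target."""
    return row.get("status") == "active"


def apply_mapping(rows, mapping, selector):
    """Select qualifying source rows and reshape them into target rows."""
    return [
        {target: spec["refiner"](row[spec["source"]]) for target, spec in mapping.items()}
        for row in rows
        if selector(row)
    ]


source = [
    {"cust_nm": "jane doe", "ctry_cd": "us", "rev": "10.5", "status": "active"},
    {"cust_nm": "john roe", "ctry_cd": "ca", "rev": "7", "status": "closed"},
]
print(apply_mapping(source, MAPPING, is_active))
# [{'customer_name': 'Jane Doe', 'country': 'US', 'revenue': 10.5}]
```

Keeping the mapping in a structure like this is what makes it easy to render as a diagram or to store alongside other metadata.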

Data cleansing is the process of resolving inconsistencies and fixing anomalies in source data, typically as part of the transformation process, based on business intelligence and rules.

Data cleansing improves data quality by validating, correctly naming, and standardizing data.
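The three cleansing activities named above (validating, correctly naming, and standardizing) can be sketched for a single record. The alias table, the simplified e-mail pattern, and the `cleanse` function are hypothetical; real cleansing rules would come from the business rules for each data set.

```python
import re

# Hypothetical reference data used to standardize free-form state values.
STATE_ALIASES = {"ca": "CA", "calif": "CA", "california": "CA", "ny": "NY", "new york": "NY"}
# Deliberately simple e-mail pattern; real validation rules would be stricter.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def cleanse(record):
    """Return a cleansed copy of `record` and a list of problems found."""
    problems = []
    # Correct naming: collapse repeated whitespace and use title case.
    name = " ".join(record.get("name", "").split()).title()
    # Standardize: map known spellings and abbreviations to a two-letter code.
    state_key = record.get("state", "").strip().lower().rstrip(".")
    state = STATE_ALIASES.get(state_key)
    if state is None:
        problems.append(f"unknown state: {record.get('state')!r}")
    # Validate: lower-case the address and check its basic shape.
    email = record.get("email", "").strip().lower()
    if not EMAIL_RE.fullmatch(email):
        problems.append(f"invalid email: {record.get('email')!r}")
    return {"name": name, "state": state, "email": email}, problems


print(cleanse({"name": "  jane   DOE ", "state": "Calif.", "email": " Jane@Example.COM "}))
# ({'name': 'Jane Doe', 'state': 'CA', 'email': 'jane@example.com'}, [])
```

Returning the list of problems, instead of silently discarding bad records, lets anomalies be reported or routed to a review step.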

A target system is a database, application, file, or other storage facility into which transformed source data is loaded in a data warehouse or platform.

A source system is a database, application, file, or other storage facility from which the data in a data warehouse or platform is derived.

Examples include flat files, Oracle tables, Microsoft SQL Server tables, COBOL sources, and XML files.

Metadata describes data and other structures, such as objects, business rules, and processes. The schema design of a data warehouse or platform is typically stored in a repository as metadata, which is used to generate the scripts that build and populate the data platform, both before and after processing.

Metadata contains all the information about the source tables, target tables, transformations, events, triggers, and time-blockchain information, which makes it easier to perform transformations and generate the Insights.
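The idea that stored schema metadata is used to generate the scripts that build and populate a target can be shown in a few lines. The `TABLE_META` structure and the two generator functions are hypothetical, and SQLite is only a convenient stand-in target; identifiers are interpolated directly into SQL, so this pattern assumes the metadata is trusted.

```python
import sqlite3

# Hypothetical metadata describing one target table and its lineage.
TABLE_META = {
    "name": "sales_by_region",
    "columns": [("region", "TEXT"), ("total_sales", "REAL")],
    "source": "crm_orders",  # where the data came from (informational only here)
}


def build_ddl(meta):
    """Generate a CREATE TABLE script from the metadata."""
    cols = ", ".join(f"{name} {sqltype}" for name, sqltype in meta["columns"])
    return f"CREATE TABLE {meta['name']} ({cols})"


def build_insert(meta):
    """Generate a parameterized INSERT script from the same metadata."""
    names = ", ".join(name for name, _ in meta["columns"])
    marks = ", ".join("?" for _ in meta["columns"])
    return f"INSERT INTO {meta['name']} ({names}) VALUES ({marks})"


conn = sqlite3.connect(":memory:")
conn.execute(build_ddl(TABLE_META))                      # build
conn.executemany(build_insert(TABLE_META),               # populate
                 [("EAST", 120.5), ("WEST", 80.0)])
print(conn.execute("SELECT * FROM sales_by_region ORDER BY region").fetchall())
# [('EAST', 120.5), ('WEST', 80.0)]
```

Because both scripts come from one description, changing a column in the metadata changes the build and load scripts together.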

Transformation is the process of manipulating data. Any manipulation beyond copying is a transformation. This may include cleansing, aggregating, and integrating data from multiple sources, as well as creating dynamic data logic, events, triggers, and business rules.

A transformation is a repository object that generates, modifies, or passes data.
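To make "a repository object that generates, modifies, or passes data" concrete, the sketch below defines one hypothetical transformation class for each of those three behaviors and keeps named instances in a dictionary acting as a simple repository. The class names, the `REPOSITORY` dict, and the `run` helper are assumptions for illustration only.

```python
from abc import ABC, abstractmethod
from itertools import count
from typing import Iterable, Iterator

Row = dict


class Transformation(ABC):
    """Base class: every transformation consumes rows and yields rows."""

    @abstractmethod
    def apply(self, rows: Iterable[Row]) -> Iterator[Row]: ...


class PassThrough(Transformation):
    """Passes data: yields each row unchanged."""

    def apply(self, rows):
        yield from rows


class Uppercase(Transformation):
    """Modifies data: upper-cases one field in every row."""

    def __init__(self, field):
        self.field = field

    def apply(self, rows):
        for row in rows:
            yield {**row, self.field: row[self.field].upper()}


class AddSequence(Transformation):
    """Generates data: adds a sequential key field, restarting on each run."""

    def __init__(self, field, start=1):
        self.field = field
        self.start = start

    def apply(self, rows):
        counter = count(self.start)
        for row in rows:
            yield {**row, self.field: next(counter)}


# A minimal "repository": named, reusable transformation objects.
REPOSITORY = {
    "pass": PassThrough(),
    "upper_region": Uppercase("region"),
    "row_key": AddSequence("row_id"),
}


def run(names, rows):
    """Chain the named transformations in order and collect the result."""
    for name in names:
        rows = REPOSITORY[name].apply(rows)
    return list(rows)


print(run(["pass", "upper_region", "row_key"], [{"region": "east"}, {"region": "west"}]))
# [{'region': 'EAST', 'row_id': 1}, {'region': 'WEST', 'row_id': 2}]
```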

If your company can benefit from an intelligent, integrated data platform,

EIMetrixs

EIMetrixs provides services that help organizations make the best possible use of their data.

EIMetrixs offerings enhance and augment the speed and usability of existing data services within an organization.

Headquartered in the United States of America, we serve clients globally.

© EIMetrixs LLC.